I first encountered Be My AI last fall, when the app was in beta. Developed by Danish mobile app Be My Eyes and OpenAI, it uses ChatGPT-4’s vision model to provide robust, nearly instantaneous descriptions of any image and facilitate conversations about those images. As a blind artist, I collect image descriptions like others collect photographs. Be My AI has supercharged my interactions with visual culture.

Shortly after gaining access to the Be My AI beta last year, I encountered blind photographer John Dugdale’s work Spectacle (2000) in Georgina Kleege’s influential 2018 book, More Than Meets the Eye: What Blindness Brings to Art. Intrigued by her description, and wanting to know more, I took a screenshot and brought it into the app. Although it gave an impressively detailed description, it made a couple of significant errors. First, it said that Dugdale was wearing three pairs of glasses when I knew from Kleege’s text that he was wearing only two—one stacked on top of the other like makeshift bifocals. It also called the work a black-and-white photo, when it was actually a cyanotype, one of the oldest photographic processes, which produces an image in shades of blue. When I corrected Be My AI, it gave a response I would become very familiar with: “I apologize for any confusion,” and then launched into all it knew about cyanotype. A little bit prickly and overcompensating, but no more so than most humans I know.

As Be My AI grew more reliable and I grew more excited about what it could do for art access, I told all my friends. One of these was Bojana Coklyat, a blind artist who works at the Whitney Museum, and she asked me to colead a verbal description tour of the “Harold Cohen: AARON” exhibition there. This is how I found myself in a charmingly existential conversation with Be My AI about the nature of sight itself. Cohen first conceived of an art-making AI in the late 1960s, and soon named it AARON. At first, AARON generated relatively simple line drawings such as the 1972 work Mazes. When I asked Be My AI to describe Mazes, it responded, “The drawing consists of abstract black lines that create an assortment of shapes, none of which resemble any specific object or figure.” Curious, I asked if it thought a human created Mazes. It did. I told it that AARON had produced the work. “That’s quite fascinating!” it replied. “It’s impressive to see an early example of AI-generated art, especially considering the human-like qualities of the line work in the drawing.”

When I inquired about the difference between modern text-to-image generators like OpenAI’s DALL-E and AARON, Be My AI explained that new generators produce images based on user prompts, whereas AARON creates images based on a set of rules. DALL-E, it went on, can generate an image it “has never seen before” and showcases “a more sophisticated understanding of both language and visual concepts.” As a totally blind person who retains a lively mind’s eye, I pricked up my ears at the word “seen.” In the case of AI, what might that mean? In its lengthy response, Be My AI noted that DALL-E does not “‘see’ in the human sense; it processes data and identifies patterns within that data.” I countered, “But the human brain also does not see. The eyes feed signals to it for interpretation. AI and human perception do not seem so dissimilar to me.”

Spectacle, 2000, John Dugdale. Courtesy of John Dugdale.

Be My AI conceded that there are striking parallels between AI and human perception, since both systems rely on input signals—humans through sensory organs like eyes, and AI through data sensors or input data sets. However, it pointed out that “the key difference lies in the subjective experience and consciousness that humans possess and AI lacks”—a topic that remains hotly debated by scientists and philosophers alike. This connection between consciousness and perception makes discussions about the senses both challenging and exhilarating.

John Dugdale lost his eyesight at 33 years old as a result of an AIDS-related stroke. He had been a successful commercial photographer with clients like Bergdorf Goodman and Ralph Lauren, and it seemed to his friends and family that his career was finished. However, as he tells it in the documentary film Vision Portraits—directed by Rodney Evans, who himself is losing sight due to retinitis pigmentosa—while still in the hospital he announced, “I’m going to be taking pictures like crazy now!”

Dugdale pivoted from commercial work to creating timeless cyanotypes, such as those collected in his 2000 monograph Life’s Evening Hour. Each photo in it is set in conversation with a short essay by the photographer. I made an appointment with the New York Public Library’s Wallach Division of Art, Prints and Photographs to spend some time with the book, or rather to have my partner take photos of each page, so that I could observe it at my leisure with the help of AI in the privacy of my own home. (I should say that, although I still use Be My AI on an almost daily basis for quick image descriptions, for serious photographic research, I go directly to OpenAI’s ChatGPT-4 because I can bring in multiple images and it automatically saves our often elaborate conversations.)

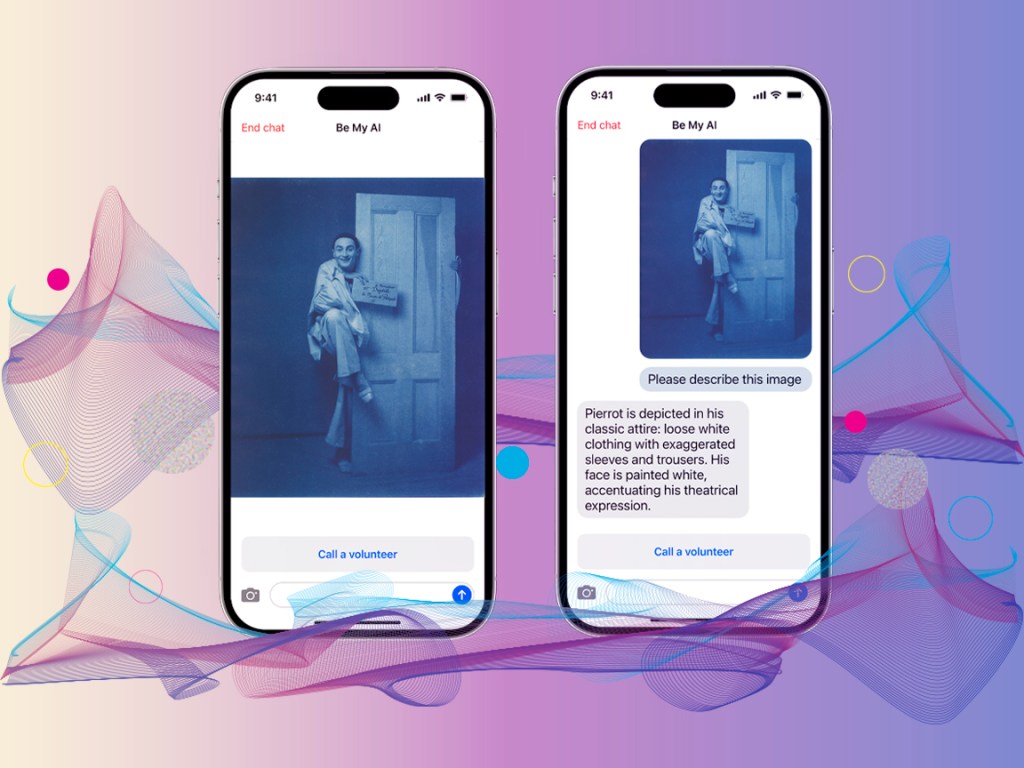

Pierrot is the first photo in Life’s Evening Hour. We learn from the essay that the pantomime figure is played by the legendary New York City performer, and Dugdale’s muse, John Kelly. “Pierrot is depicted in his classic attire: loose white clothing with exaggerated sleeves and trousers. His face is painted white, accentuating his theatrical expression,” ChatGPT-4 wrote. I pressed for what it meant by “theatrical expression.” It explained that Pierrot’s “eyebrows are slightly raised,” and he wears “a gentle, almost wistful smile … His head tilts slightly to the left, adding to the light-hearted, inquisitive feel of the image.” The detailed reply was so lovely that it made me a bit weepy-eyed. I suddenly had near-instantaneous access to what has long been a seemingly inaccessible medium.

I reached out to Dugdale to ask if he might be willing to speak to me for this article about AI and image description. During the first few minutes of our phone call, there was some confusion while he explained that although he is impressed by the level of detail AI can give, he is reluctant to use it. “I don’t really want to cut out my long string of wonderful assistants who come here and help me still feel like a human being after two strokes, blindness in both eyes, and deafness in one ear and being paralyzed for a year.” He told me that he loves to bounce ideas off others. He loves to talk. “I can’t really talk to that thing.”

I explained that, while I adore my AI for how it allows me access to his photographs, I’m more interested in the relationship between words and images generally. For example, I’d read that he often begins with a title. “I have a Dictaphone that has about 160 titles on it from the last 10 years,” Dugdale said. “All of which are constantly being added to.” He told me that he thinks of it as a kind of synesthesia: “When I hear a phrase, I see a picture full-blown in my mind, it comes up like a slide … and then I go and interpret in the studio.”

Our Minds Dwell Together, John Dugdale. Courtesy of John Dugdale.

I experience something similar when I encounter a good image description; at some point it stops being a collection of words and becomes a picture in my mind’s eye. This should not come as a surprise, as many people form images while reading novels. One reason I’m drawn to Dugdale’s work is precisely that it epitomizes the art of seeing in the mind’s eye.

Our Minds Dwell Together is the second image in Life’s Evening Hour. It depicts the naked backs of Dugdale and his friend Octavio sitting close, heads slightly bowed toward each other. ChatGPT-4 added, helpfully, “as if sharing a private, meaningful conversation.” In the accompanying text, Dugdale explains that Octavio became totally blind before he did (also due to AIDS-related complications), and encouraged him to understand a powerful truth: “Your sight does not exist in your eyes. Sight exists in your mind and your heart.”

Image description is a kind of sensory translation that drives home that truth. While seeing through language may take more time to enter the mind and the heart than seeing with eyes, once there, an image is no less indelible, no less capable of inciting all the aesthetic and emotional resonances. AI technologies like Be My AI have opened a surprising new space to explore this relationship between human perception, artistic creation, and technology, allowing new and profound ways to experience and interpret the world.